#consumer electronics #gaming #human-machine interaction #learning #storytelling #interfaces #machine learning #affective computing #ethics #industry #interactive #computer science #entertainment #art #artificial intelligence #design #creativity #history #technology #archives

Temporal Telepresence, 2025

How can we best simulate place and time?

This project created a sensory simulation designed to conjure feelings of presence in past places.

This research project investigated how immersive systems can preserve autobiographical memory by integrating tangible objects, 3D scans of autobiographical environments, and virtual heirlooms grounded in lived experience. The project aimed to encourage emotionally resonant linkages that evoke vivid recollection. Designed to support intergenerational memory sharing, the system showed promise for enhancing emotional well-being, strengthening familial and social bonds, and fostering a sense of telepresence that enables communication and connection across time.

We designed a study exploring interaction with memory reconstructions using Gaussian splatting paired with sensory stimuli. Using a dataset of object-specific 3D scans, home movies rendered in depth, and personal anecdotal data, we built digital twins to explore the therapeutic potential of AI-driven autobiographical simulations. The goal was to align an individual’s sensory experience with generative models by fusing multi-modal data. Our user study of n=16 family members showed that combining sensory stimuli with Gaussian splats enhanced memory vividness. Future work aims toward dynamically generated world models that provide users with personal multi-sensory memories on demand.

Gaussian splats of home movies were used as a way to spatialize memory networks.

Prompt-based movement of avatars in highly realistic 3D recreations of past places was used to generate memory simulations in real time.

Multi-Sensory Personal World Models

Key features of the study included contemporary Gaussian splatting paired with photogrammetry to create high-fidelity 3D models of the home, integration of family photographs and films, and real-time rendering of dynamic environments.
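For readers unfamiliar with how a Gaussian splat scene becomes an image, the sketch below shows the general front-to-back alpha blending step used in splat rendering: splats covering a pixel are sorted by depth, and each one contributes its color weighted by its opacity and by how much light the nearer splats let through. This is a generic illustration, not the renderer used in the study; the function name, the input format, and the early-exit threshold are assumptions made for the example.

```python
def composite_front_to_back(splats, min_transmittance=1e-4):
    """Blend depth-sorted splats for one pixel.

    splats: list of (rgb, alpha) pairs, nearest first (hypothetical format).
            rgb is a 3-tuple in [0, 1]; alpha is the splat's opacity at this
            pixel after its Gaussian falloff has been applied.
    Returns the blended color and the remaining transmittance.
    """
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light not yet blocked by nearer splats
    for rgb, alpha in splats:
        weight = transmittance * alpha
        for i in range(3):
            color[i] += weight * rgb[i]
        transmittance *= 1.0 - alpha
        if transmittance < min_transmittance:
            break  # pixel is effectively opaque; farther splats are hidden
    return tuple(color), transmittance


# Usage: a half-opaque red splat in front of a half-opaque green splat.
pixel_color, remaining = composite_front_to_back(
    [((1.0, 0.0, 0.0), 0.5), ((0.0, 1.0, 0.0), 0.5)]
)
```

Because the nearer splat is weighted first, the red contribution here is twice the green one, which is why depth ordering matters for a convincing reconstruction.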

Participants reported experiencing a sense of "being there," with vivid recollections of sights, sounds, and even emotions associated with the simulated environments.

Interacting with Multi-Modal Media

Interaction Scenario:

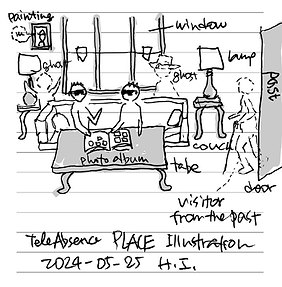

1) The experience first showed the real, present room surrounding the user (with living family members and loved ones) alongside augmented reality images of the past. As the user moved, this view transitioned into the second stage.

2) The second stage showed a virtual environment of the past mapped onto the present, with unaltered original 16mm films embedded in the environment.

Detailed feedback from participants revealed the emotional impact of interacting with these simulations.

Many described feelings of comfort and closure, particularly when revisiting spaces associated with loved ones who had passed away. These findings demonstrated the potential of such systems in therapeutic and memory support contexts.

Multi-Modal Fusion of Sensory Stimuli

Real objects that strengthened memory associations were presented to users during the immersive experience. These objects enabled touch, integrated physical stimuli with the virtual simulation in mixed reality, and tangibly linked virtual memories to physical stimuli.

As part of a multi-year study on the concept of "TeleAbsence" at the MIT Media Lab in collaboration with Hiroshi Ishii, this project explored how we can foster and strengthen memories of the past through an aesthetics of incompleteness, illusory communications, and traces of reflection that connect individuals with past memory without fully replacing their reality.

The study was conducted in an empty home that was filled with furniture from the original home, creating a physical environment that recalled the texture, scent, and physical surroundings of the original place. Left: the home before the temporary installation was set up. Right: the installation used for the study.

360-degree images of the original home were used to help build the world model, enabling generative interactions within the environment.

Hundreds of meticulous 3D scans, covering each object and space in the home, were retopologized using a custom workflow, at a resolution that retained their original shape, to preserve detail while enabling fast loading.
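The custom workflow itself is not described here. As a rough illustration of the underlying trade-off between detail and loading speed, the sketch below uses vertex clustering, a much simpler mesh simplification technique than retopology: vertices that fall into the same grid cell are merged into their centroid, and triangles that collapse are dropped. The function name, the mesh format, and the `cell_size` parameter are assumptions for the example and do not reflect the project's actual pipeline.

```python
def simplify_by_vertex_clustering(vertices, triangles, cell_size):
    """Reduce a triangle mesh by merging vertices that share a grid cell.

    vertices:  list of (x, y, z) floats
    triangles: list of (i, j, k) indices into vertices
    cell_size: edge length of the clustering grid; larger means coarser
    Returns (new_vertices, new_triangles).
    """
    cell_to_new = {}  # grid cell -> index of the merged vertex
    sums = []         # per merged vertex: [sum_x, sum_y, sum_z, count]
    old_to_new = []   # original vertex index -> merged vertex index
    for x, y, z in vertices:
        cell = (int(x // cell_size), int(y // cell_size), int(z // cell_size))
        if cell not in cell_to_new:
            cell_to_new[cell] = len(sums)
            sums.append([0.0, 0.0, 0.0, 0])
        idx = cell_to_new[cell]
        acc = sums[idx]
        acc[0] += x
        acc[1] += y
        acc[2] += z
        acc[3] += 1
        old_to_new.append(idx)

    # Each merged vertex sits at the centroid of the vertices it replaced.
    new_vertices = [(sx / n, sy / n, sz / n) for sx, sy, sz, n in sums]

    new_triangles = []
    seen = set()
    for a, b, c in triangles:
        a, b, c = old_to_new[a], old_to_new[b], old_to_new[c]
        if a == b or b == c or a == c:
            continue  # triangle collapsed to an edge or a point
        key = frozenset((a, b, c))
        if key in seen:
            continue  # duplicate of a triangle already kept
        seen.add(key)
        new_triangles.append((a, b, c))
    return new_vertices, new_triangles


# Usage: two nearby vertices merge, so one of the two triangles collapses.
verts, tris = simplify_by_vertex_clustering(
    [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0)],
    [(0, 1, 3), (1, 2, 3)],
    cell_size=0.5,
)
```

A smaller `cell_size` keeps more of the scanned shape at the cost of a heavier mesh, which is the same balance the retopology step has to strike.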

Experience on Demand

The image sequence above demonstrates the transition from the present state of the space to the past state, where photographs incite memory and the mixed reality environment eventually fills the viewer's perception.